There has been much discussion about the benefits of “moving testing left.” Our experts will tell you that integrating automated testing (Quality Gates) into your build pipelines is a critical success factor for the rapid build-and-deploy automation needed to truly reap the benefits of Agile. That said, implementing automated Quality Gates carries significant costs for the organization, and those costs must be weighed carefully and optimized so that the implementation retains a positive Return On Investment (ROI).

Quality Gates are based upon the stage-gate system initially presented in 1986 and originally applied to quality control processes in the automotive industry. The concept is simple: you have tests, or gates, that validate each step in your overall process. If the step passes the test, the process proceeds to the next step. Upon failure, the process is stopped and corrective actions are initiated to identify and resolve the issue. The earlier in the process a defect is found and corrected, the greater the cost savings. Note: The gates do not have to run serially, and in fact you will likely need to run some of them in parallel to stay within the time constraints for the overall process (more on this idea later).

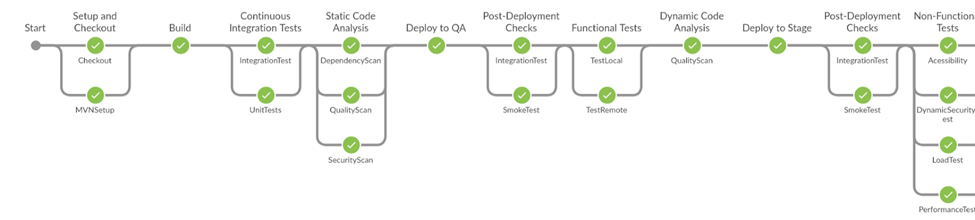
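To make the pass-or-stop behavior concrete, here is a minimal sketch in Python. It is not how our client pipelines were written (those ran in Jenkins); the gate names and the `run_gates` helper are hypothetical, and the lambdas simply stand in for real tools.

```python
from typing import Callable, List, Tuple

# A gate is a name plus a check that returns True when the step passes.
Gate = Tuple[str, Callable[[], bool]]


def run_gates(gates: List[Gate]) -> Tuple[bool, List[str]]:
    """Run gates in order and stop at the first failure.

    Returns (all_passed, names_of_gates_that_ran).
    """
    ran: List[str] = []
    for name, check in gates:
        ran.append(name)
        if not check():
            # Stop here so later, more expensive steps never run on a bad build.
            return False, ran
    return True, ran


# Hypothetical pipeline: each lambda stands in for a real tool invocation.
pipeline: List[Gate] = [
    ("unit tests", lambda: True),
    ("static analysis", lambda: False),  # simulated failure
    ("performance tests", lambda: True),
]

passed, ran = run_gates(pipeline)
print(passed, ran)
```

In this sketch the performance tests never run, because the static analysis gate fails first. That is the cost-saving idea in miniature: the cheap, early gate stops the process before the expensive one starts.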

On my most recent client project, we helped the client incrementally implement automated Continuous Integration and Continuous Delivery (CI/CD) pipelines (Jenkins Pipeline in multi-branch mode). These pipelines not only performed build and deployment, leveraging Nexus build artifact repos, Nginx, Kafka, Redis, etc., but also executed unit testing, static analysis, and security scanning (Fortinet). We eventually added dynamic and performance tests (JMeter and Gridlastic) by leveraging test automation we had built for regression, performance, and portability testing. The results were astounding: we identified numerous bugs with every build before expending testing time and energy on defective builds. The resulting time savings and increases in build quality made the testing process more efficient, opened new opportunities for increased test coverage, and produced a more reliable, higher-quality product.

So, all of this sounds great so far, right? What is the catch? It’s a common saying in product development that “you can have it good, you can have it fast, and you can have it cheap… Pick any two.” What we have done here is focus on “good and fast,” and that focus has a cost that comes in several forms: the build-out time, the added complexity and pipeline execution time that directly affect your development staff and process, and the Cloud server resources consumed.

Building and deploying software systems is a complex process involving a multitude of steps that must all be done correctly for a successful outcome. You cannot reduce the number of steps that have to happen, and your code base is growing daily, so to complete the process more quickly you need to both automate the build and deployment steps (for repeatability) and execute more steps at the same time (parallelism). Building the scripts to automate build and deployment is a complex undertaking that requires specialized tools and skills. Building parallelism into the pipelines adds another layer of complexity. (see image below)

On our recent client project, to enable parallelization in build and test execution and to reduce the time needed to spin up the temporary services the pipeline tasks required, we leveraged containerization (Docker containers on Kubernetes clusters and pods) and AWS CloudFormation templates. Time became a significant constraint, as every test (or Quality Gate) we added increased pipeline execution time, which in turn cost more developer wait time. The additional complexity of parallelization had another dimension as well: debugging build failures became much harder, a direct result of the very automation and parallelism we had introduced to make the pipelines more efficient. Developers had to be trained on the pipeline architecture and tooling to be able to debug build and test failures. Tools had to be configured to allow log file access, and logging strategies had to be developed to balance what needed to be logged and retained against the space and time needed to create and store the logs.
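The trade-off between parallelism and debuggability can be sketched in a few lines of Python. This is an illustration only, not our Jenkins or Kubernetes configuration: `make_gate` and `run_parallel` are hypothetical helpers, and each gate just sleeps to simulate work.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


def make_gate(seconds: float, result: bool) -> Callable[[], bool]:
    """Build a stand-in gate that sleeps to simulate work, then returns result."""
    def gate() -> bool:
        time.sleep(seconds)
        return result
    return gate


def run_parallel(gates: Dict[str, Callable[[], bool]]) -> Dict[str, bool]:
    """Run independent gates concurrently and collect each result by name."""
    with ThreadPoolExecutor(max_workers=max(1, len(gates))) as pool:
        futures = {name: pool.submit(check) for name, check in gates.items()}
        # Keying results by gate name keeps failures attributable afterwards.
        return {name: future.result() for name, future in futures.items()}


# Hypothetical gates with simulated durations and outcomes.
gates = {
    "unit tests": make_gate(0.2, True),
    "static analysis": make_gate(0.2, True),
    "security scan": make_gate(0.2, False),  # simulated failure
}

start = time.perf_counter()
results = run_parallel(gates)
elapsed = time.perf_counter() - start

failed = [name for name, ok in results.items() if not ok]
print(f"failed gates: {failed}, wall time: {elapsed:.2f}s")
```

Run serially, the three simulated gates would take roughly the sum of their durations; run concurrently, the wall time is closer to the slowest single gate. The price is that the results arrive interleaved, which is why the sketch records every outcome under its gate name. In a real pipeline, the same idea applies to log files.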

Having lived this project, I offer the following recommendations for successfully implementing Quality Gates:

- Make careful and deliberate decisions about which tests (or Quality Gates) to include in your process, balancing the ROI against the cost, time, and complexity;

- Coordinate incremental change, and lead the rollout of each change with training as the first step; and

- Embed DevOps engineers in your development scrum teams to increase communication and collaboration, and to give developers the support they will need both to understand the process and to debug the issues that arise.
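One way to approach the first recommendation is to put rough numbers on each candidate gate and see what fits within the pipeline time your developers can tolerate. The sketch below is a simplification under stated assumptions: the `CandidateGate` fields, the greedy ranking, and every figure in it are made up for illustration and are not measurements from our project.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CandidateGate:
    name: str
    added_minutes: float         # estimated pipeline time the gate adds per build
    hours_saved_per_week: float  # estimated downstream effort the gate saves


def select_gates(candidates: List[CandidateGate], budget_minutes: float) -> List[str]:
    """Greedily pick gates with the best savings per added minute within budget."""
    ranked = sorted(
        candidates,
        key=lambda g: g.hours_saved_per_week / g.added_minutes,
        reverse=True,
    )
    chosen: List[str] = []
    remaining = budget_minutes
    for gate in ranked:
        if gate.added_minutes <= remaining:
            chosen.append(gate.name)
            remaining -= gate.added_minutes
    return chosen


# All figures below are hypothetical.
candidates = [
    CandidateGate("unit tests", added_minutes=3, hours_saved_per_week=10),
    CandidateGate("static analysis", added_minutes=2, hours_saved_per_week=4),
    CandidateGate("performance tests", added_minutes=20, hours_saved_per_week=8),
]

print(select_gates(candidates, budget_minutes=10))
```

With a 10-minute budget, this sketch keeps the unit tests and static analysis and leaves out the performance tests. A gate that does not fit the per-build budget may still be worth running on a different schedule or in parallel, so treat the output as a conversation starter rather than a decision.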

In our case, we implemented the base pipelines repo by repo, training and supporting each of the affected development teams as we rolled out the changes. As we added new steps and Quality Gates, we repeated the cycle of training and incremental rollout, minimizing disruption and developer downtime while incrementally improving the pipelines.

Change is constant, so set up your teams and processes to encourage collaboration and to improve overall quality.