In my past articles I’ve written about using custom listeners to get the reporting you want, or to make your tests do what you want. I’ve always referred to these listeners offhandedly, never paying them direct attention. I figured it was finally time to write a full-fledged post about listeners, and some useful tricks.

What is a Listener?

Most testing frameworks (JUnit, TestNG, Cucumber, Robot…) have what they call a ‘listener.’ This is a class with methods that run before or after your tests, and that hook into reporting and logging. It works within the framework to identify what passes, what fails, and maybe even why. Some listeners also control how and when tests are executed. Understanding how these listeners work can give you additional insight into how your tests run. Understanding how they can be modified can give you greater control over your tests.
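To make the idea concrete, here is a minimal plain-Java sketch of the pattern with no TestNG dependency, so it runs on its own. The `MiniTestListener` interface, `MiniRunner`, `RecordingListener`, and `ListenerDemo` names are all hypothetical, loosely modeled on the shape of TestNG's `ITestListener`. The point is only that the runner, not your test code, calls the listener before and after each test and tells it the outcome.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical listener contract, loosely modeled on TestNG's ITestListener. */
interface MiniTestListener {
    default void onTestStart(String name) {}
    default void onTestSuccess(String name) {}
    default void onTestFailure(String name, Throwable cause) {}
    default void onFinish() {}
}

/** A toy runner: it, not the tests, calls every registered listener's hooks. */
class MiniRunner {
    private final List<MiniTestListener> listeners = new ArrayList<>();

    void addListener(MiniTestListener listener) {
        listeners.add(listener);
    }

    void run(Map<String, Runnable> tests) {
        for (Map.Entry<String, Runnable> test : tests.entrySet()) {
            String name = test.getKey();
            listeners.forEach(l -> l.onTestStart(name));          // "before" hook
            try {
                test.getValue().run();
                listeners.forEach(l -> l.onTestSuccess(name));    // "after" hook: passed
            } catch (AssertionError | RuntimeException e) {
                listeners.forEach(l -> l.onTestFailure(name, e)); // "after" hook: failed, and why
            }
        }
        listeners.forEach(MiniTestListener::onFinish);            // once, after every test
    }
}

/** A listener that just records the events it is told about, in order. */
class RecordingListener implements MiniTestListener {
    final List<String> events = new ArrayList<>();

    @Override public void onTestStart(String name) { events.add("start " + name); }
    @Override public void onTestSuccess(String name) { events.add("pass " + name); }
    @Override public void onTestFailure(String name, Throwable cause) {
        events.add("fail " + name + ": " + cause.getMessage());
    }
    @Override public void onFinish() { events.add("finish"); }
}

class ListenerDemo {
    static List<String> runDemo() {
        Map<String, Runnable> tests = new LinkedHashMap<>(); // preserves run order
        tests.put("loginWorks", () -> {});
        tests.put("checkoutWorks", () -> { throw new AssertionError("cart is empty"); });

        RecordingListener recorder = new RecordingListener();
        MiniRunner runner = new MiniRunner();
        runner.addListener(recorder);
        runner.run(tests);
        return recorder.events;
    }
}
```

Running `ListenerDemo.runDemo()` yields start/pass for the first test, start/fail (with the failure message) for the second, and a single finish event. A real framework layers much more on top (configuration methods, skips, parallelism), but the observer shape is the same.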

For this post, I’m going to focus on the listener class for TestNG, how to override it, and how to wire in your changes. Many of the methods discussed have close equivalents in other frameworks, and the override pattern is the same, so it should be an easy exercise to convert these examples to your own framework.

Custom Functionality

In TestNG, the standard listener class to build on is TestListenerAdapter, which implements TestNG’s ITestListener interface and provides some basic before and after hooks for your tests. You can think of these as similar to the annotations TestNG provides, such as @BeforeSuite and @AfterSuite, or @BeforeTest and @AfterTest. The listener methods, however, receive more detail about the scenario, such as the ITestResult of the test being run. Another important note is that listeners are typically registered globally, so any change made to these methods impacts all tests run anywhere and everywhere.

To create your own listener, simply define a class that extends TestListenerAdapter, and add whatever functionality you want to that new class:

package com.coveros.utilities;

import org.testng.TestListenerAdapter;

public class Listener extends TestListenerAdapter {
}

Starting simple, let’s say we want some additional logging to show when each test starts executing. To accomplish this, we simply override the onTestStart method. This could look something like:

/**
 * Before each test runs, write out to the log to show the test is running
 *
 * @param result the TestNG test being executed
 */
@Override
public void onTestStart(ITestResult result) {
    super.onTestStart(result);
    log.info("Starting test " + result.getInstanceName());
}

Pretty simple, right? All this method does is call the parent method it’s overriding, to ensure no functionality is dropped, and then do some extra logging. That’s a good start, but we can do better.
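To see why that `super` call matters, here is a plain-Java sketch (no TestNG needed). `BaseAdapter` is a hypothetical stand-in for an adapter such as TestListenerAdapter that keeps its own records of what ran; whether the real parent does anything in a particular hook depends on the framework and version, which is exactly why calling `super` is the safe default. The two subclasses differ only in whether they call it.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for a framework adapter that keeps its own bookkeeping. */
class BaseAdapter {
    private final List<String> started = new ArrayList<>();

    public void onTestStart(String testName) {
        started.add(testName); // the parent's behavior we don't want to lose
    }

    public List<String> getStartedTests() {
        return started;
    }
}

/** Keeps the parent's work, then adds logging on top. */
class LoggingListener extends BaseAdapter {
    final List<String> log = new ArrayList<>();

    @Override
    public void onTestStart(String testName) {
        super.onTestStart(testName);          // preserve default functionality
        log.add("Starting test " + testName); // our extra behavior
    }
}

/** Same override without super: the parent's bookkeeping silently stops. */
class ForgetfulListener extends BaseAdapter {
    final List<String> log = new ArrayList<>();

    @Override
    public void onTestStart(String testName) {
        log.add("Starting test " + testName);
    }
}
```

Both subclasses log identically, but only `LoggingListener` still has anything in `getStartedTests()`. Nothing fails loudly when `super` is skipped; whatever the parent was doing just quietly stops.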

Additional Logging

Maybe we have our tests linked to some external Test Management System. If that is the case, we probably want to update that system to indicate that the test case ran, and what its status was. Luckily, there are a few existing listener methods we can use. One option is to call our Test Management System’s API and update each test case right after it runs.

/**
 * Runs the default TestNG onTestFailure, and adds additional information
 * into the testng reporter
 */
@Override
public void onTestFailure(ITestResult result) {
    super.onTestFailure(result);
    log.error(result.getInstanceName() + " failed.");
    String executionId = recordTest(result);
    zephyr.markExecutionFailed(executionId);
}

There is a little bit of magic hand-waving there, but in essence, each time a test case fails, we write out the error, record the result, and then update our run. In this case, we’re using a custom Zephyr object and class, as Zephyr is our Test Case Management System.

If we don’t like this approach, we can try a bulk approach instead. TestListenerAdapter has methods to retrieve all tests, as well as subsets based on their results, such as getPassedTests(), getFailedTests(), and getSkippedTests(). So instead, we could override a method that runs after the tests have completed.

/**
 * Runs the default TestNG onFinish, and adds additional information
 * into the testng reporter
 */
@Override
public void onFinish(ITestContext context) {
    super.onFinish(context);
    List<String> executionIds = recordTests(getFailedTests());
    zephyr.markExecutionsFailed(executionIds);
    zephyr.markExecutionsPassed(recordTests(getPassedTests()));
}

Here, we’re doing the same as above, except in bulk, making all of our updates at the end of the run (strictly speaking, onFinish fires once for each <test> in your suite). There are pros and cons to each pattern; make your choice based on your Test Management System’s bandwidth, speed, and availability.
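One way to weigh the two patterns is to count remote calls. The sketch below is plain Java with hypothetical names throughout: `TestManagementClient` stands in for something like the article's `zephyr` object, and its method names simply mirror the ones used above, not any real Zephyr API. `CountingClient` is an in-memory fake that counts calls instead of making them.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical Test Management System client; a real API will differ. */
interface TestManagementClient {
    void markExecutionFailed(String executionId);
    void markExecutionsFailed(List<String> executionIds);
    void markExecutionsPassed(List<String> executionIds);
}

/** In-memory fake that counts remote calls instead of making them. */
class CountingClient implements TestManagementClient {
    int calls = 0;
    final List<String> failed = new ArrayList<>();
    final List<String> passed = new ArrayList<>();

    public void markExecutionFailed(String id) { calls++; failed.add(id); }
    public void markExecutionsFailed(List<String> ids) { calls++; failed.addAll(ids); }
    public void markExecutionsPassed(List<String> ids) { calls++; passed.addAll(ids); }
}

/** Per-test pattern: one remote call as soon as each failure happens. */
class PerTestReporter {
    private final TestManagementClient client;

    PerTestReporter(TestManagementClient client) { this.client = client; }

    void onTestFailure(String executionId) {
        client.markExecutionFailed(executionId);
    }
}

/** Bulk pattern: buffer results, then make at most one call per status at the end. */
class BulkReporter {
    private final TestManagementClient client;
    private final List<String> failed = new ArrayList<>();
    private final List<String> passed = new ArrayList<>();

    BulkReporter(TestManagementClient client) { this.client = client; }

    void onTestSuccess(String executionId) { passed.add(executionId); }
    void onTestFailure(String executionId) { failed.add(executionId); }

    void onFinish() {
        if (!failed.isEmpty()) client.markExecutionsFailed(failed);
        if (!passed.isEmpty()) client.markExecutionsPassed(passed);
    }
}
```

With three failures, the per-test reporter makes three calls, while the bulk reporter makes two calls in total no matter how many tests ran. The trade-off is timing: per-test updates show up live and survive a run that crashes halfway, whereas bulk updates are sent only if the run reaches onFinish.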

Skipping Tests

Not only can we add logging, but we can also set up special conditions to avoid executing tests. For example, maybe you are using feature toggles, and because of how the application is deployed, you don’t want to test a certain feature. We can use our custom listener to handle this.

A great option is to skip the test if the feature is not turned on. You can use the onTestStart method (as seen before) to check whether the feature is disabled, and if the test is related to that feature, skip it. For example:

/**
 * Before each test runs, check to determine if the corresponding module, based on the test's groups, is turned
 * on or off. If the module is turned off, skip those tests
 *
 * @param result the TestNG test being executed
 */
@Override
public void onTestStart(ITestResult result) {
    for (Feature.Features feature : Feature.Features.values()) {
        // getGroups() returns a String[], so wrap it in a List to use contains()
        if (Arrays.asList(result.getMethod().getGroups()).contains(feature.name())
                && !Feature.isFeatureEnabled(feature)) {
            log.warn("Skipping " + result.getInstanceName() + ", as feature " + feature + " is disabled");
            result.setStatus(ITestResult.SKIP);
            throw new SkipException("Skipping as feature " + feature + " is disabled.");
        }
    }
}

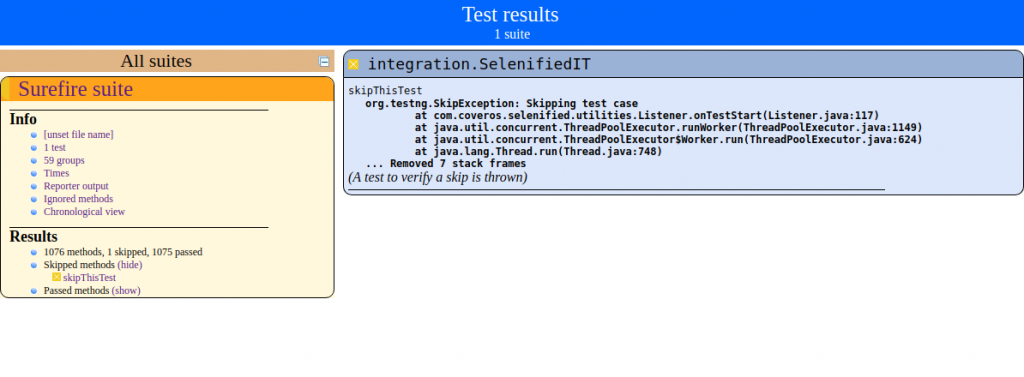

This fits seamlessly into test execution, and the results even show which tests passed, failed, and were skipped because of the feature toggles.
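Because the skip decision is plain logic, it can be pulled out of the listener and unit tested without running TestNG at all. The following is a minimal sketch under that assumption. `AppFeature` and `FeatureGate` are hypothetical names standing in for the article's `Feature` helper, whose real implementation isn't shown. Like the listener, it matches a test's group names against feature names and reports the first disabled feature it finds.

```java
import java.util.EnumSet;
import java.util.Optional;
import java.util.Set;

/** Hypothetical feature list, playing the role of Feature.Features. */
enum AppFeature { CHECKOUT, SEARCH, REVIEWS }

/** Decides whether a test should run, given its groups and the enabled features. */
class FeatureGate {
    private final Set<AppFeature> enabled = EnumSet.noneOf(AppFeature.class);

    FeatureGate(Set<AppFeature> enabledFeatures) {
        enabled.addAll(enabledFeatures);
    }

    /** First feature named by one of the groups that is turned off, if any. */
    Optional<AppFeature> disabledFeatureFor(Set<String> groups) {
        for (AppFeature feature : AppFeature.values()) {
            if (groups.contains(feature.name()) && !enabled.contains(feature)) {
                return Optional.of(feature);
            }
        }
        return Optional.empty();
    }

    boolean shouldRun(Set<String> groups) {
        return !disabledFeatureFor(groups).isPresent();
    }
}
```

Inside onTestStart, you would pass in the test's groups, for example `new HashSet<>(Arrays.asList(result.getMethod().getGroups()))`, and if a feature comes back, log it and throw SkipException just as the listener does. Groups that don't name a feature (such as "smoke") never cause a skip.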

Full TestNG Listener Example

package com.coveros.utilities;

import java.util.Arrays;
import java.util.List;

import org.apache.log4j.Logger;
import org.testng.ITestContext;
import org.testng.ITestResult;
import org.testng.SkipException;
import org.testng.TestListenerAdapter;

// Feature, zephyr, and recordTests(...) are project-specific helpers not shown in this post.
public class Listener extends TestListenerAdapter {

    private static final Logger log = Logger.getLogger(Listener.class);

    /**
     * Before each test runs, skip it if it belongs to a disabled feature;
     * otherwise, write out to the log to show the test is running
     *
     * @param result the TestNG test being executed
     */
    @Override
    public void onTestStart(ITestResult result) {
        for (Feature.Features feature : Feature.Features.values()) {
            // getGroups() returns a String[], so wrap it in a List to use contains()
            if (Arrays.asList(result.getMethod().getGroups()).contains(feature.name())
                    && !Feature.isFeatureEnabled(feature)) {
                log.warn("Skipping " + result.getInstanceName() + ", as feature " + feature + " is disabled");
                result.setStatus(ITestResult.SKIP);
                throw new SkipException("Skipping as feature " + feature + " is disabled.");
            }
        }
        super.onTestStart(result);
        log.info("Starting test " + result.getInstanceName());
    }

    /**
     * Runs the default TestNG onFinish, and adds additional information
     * into the testng reporter
     */
    @Override
    public void onFinish(ITestContext context) {
        super.onFinish(context);
        List<String> executionIds = recordTests(getFailedTests());
        zephyr.markExecutionsFailed(executionIds);
        zephyr.markExecutionsPassed(recordTests(getPassedTests()));
    }

    /**
     * Runs the default TestNG onTestFailure, and adds additional information
     * into the testng reporter
     *
     * @param result the TestNG test being executed
     */
    @Override
    public void onTestFailure(ITestResult result) {
        super.onTestFailure(result);
        log.error(result.getInstanceName() + " failed.");
    }
}

Implementing Custom Listener

The last step is actually getting our custom listener to run. This depends heavily on the framework, but in most cases it is pretty straightforward.

TestNG

For TestNG, you have a few options for using your custom listener. The simplest is to put it on your test class: add the @Listeners annotation above the class declaration, and BAM! Your custom listener will be picked up.

package com.coveros;

import org.testng.annotations.Listeners;

@Listeners({com.coveros.utilities.Listener.class})
public class MyTestsIT {
}

This can be a bit cumbersome if you have a lot of test classes that each want the same custom listener (which is typically the case). Instead, I prefer to create a base class that carries this annotation along with other common methods (starting browsers, creating test data), and have each of my test classes extend it. That way, they all inherit the listener without having to declare it explicitly.

Another option is to rely on your build tool instead of the tests themselves. Whether you’re using Maven, Gradle, or even Ant, each of these tools lets you specify a custom listener. For TestNG, it’s as simple as adding a setting to the block that executes your tests.

Maven

<plugin>
    <groupId>org.apache.maven.plugins</groupId>
    <artifactId>maven-failsafe-plugin</artifactId>
    <version>3.0.0</version>
    <configuration>
        <properties>
            <property>
                <name>listener</name>
                <value>com.coveros.utilities.Listener</value>
            </property>
        </properties>
    </configuration>
</plugin>

Gradle

task verify(type: Test) {
    useTestNG() {
        useDefaultListeners = true
    }
    options {
        listeners.add("com.coveros.utilities.Listener")
    }
}

Ant

<target name="verify" depends="compile">
    <java classpathref="classpath" classname="org.testng.TestNG" failonerror="true">
        <arg value="-listener"/>
        <arg value="com.coveros.utilities.Listener"/>
    </java>
</target>

Cucumber

I’ll cover setting up custom listeners in Cucumber as well, because Cucumber can use JUnit or TestNG as its underlying runner, so the example fits in here pretty well. With TestNG as the runner, it’s as straightforward as plain TestNG, but instead of putting the listener on your test class, put it on your test runner. Just add the annotation above the runner’s class declaration, and BAM! Your custom listener will be picked up.

package com.coveros;

@CucumberOptions(...)
@Listeners({com.coveros.utilities.Listener.class})
public class MyTestsRunner extends AbstractTestNGCucumberTests {
}

Final Thoughts

I’ve described a few different ways to use a custom listener, and how to wire it in. There are many more opportunities out there, and we’ve only scratched the surface. My biggest suggestion is to review the listener of your current testing framework, see what capabilities exist, and then figure out what you can, and want to, implement.

As always, leave comments below, and happy testing!
